The Skunkworks Project

When you’re in the consulting space, you’re constantly looking at technology stacks and working to help your clients find the right fit with the right innovation curve. Snowflake quickly became that in the Data Warehousing sphere as it solved so many of the core issues in data consumption. The disruption of the engrained workarounds that organizations had accepted as “normal” was game-changing in the data management space. Many introductions to Snowflake (especially back in 2016) started with incredulity, then later amazement. In those early years, the benefits of moving to Snowflake were compelling even without so many “nice to haves”. For example, Snowflake didn’t have any way of issuing Stored Procedures until 2018, but despite that, it was revolutionizing the corporate data to information pipeline with other more compelling features. Suddenly audiences were getting the information they needed and data volume was a nonissue. Additionally, there was no physical barrier to entry. If you were a small startup, you could get started with an on-demand footprint. This was perfect because you would only pay if your company took off.

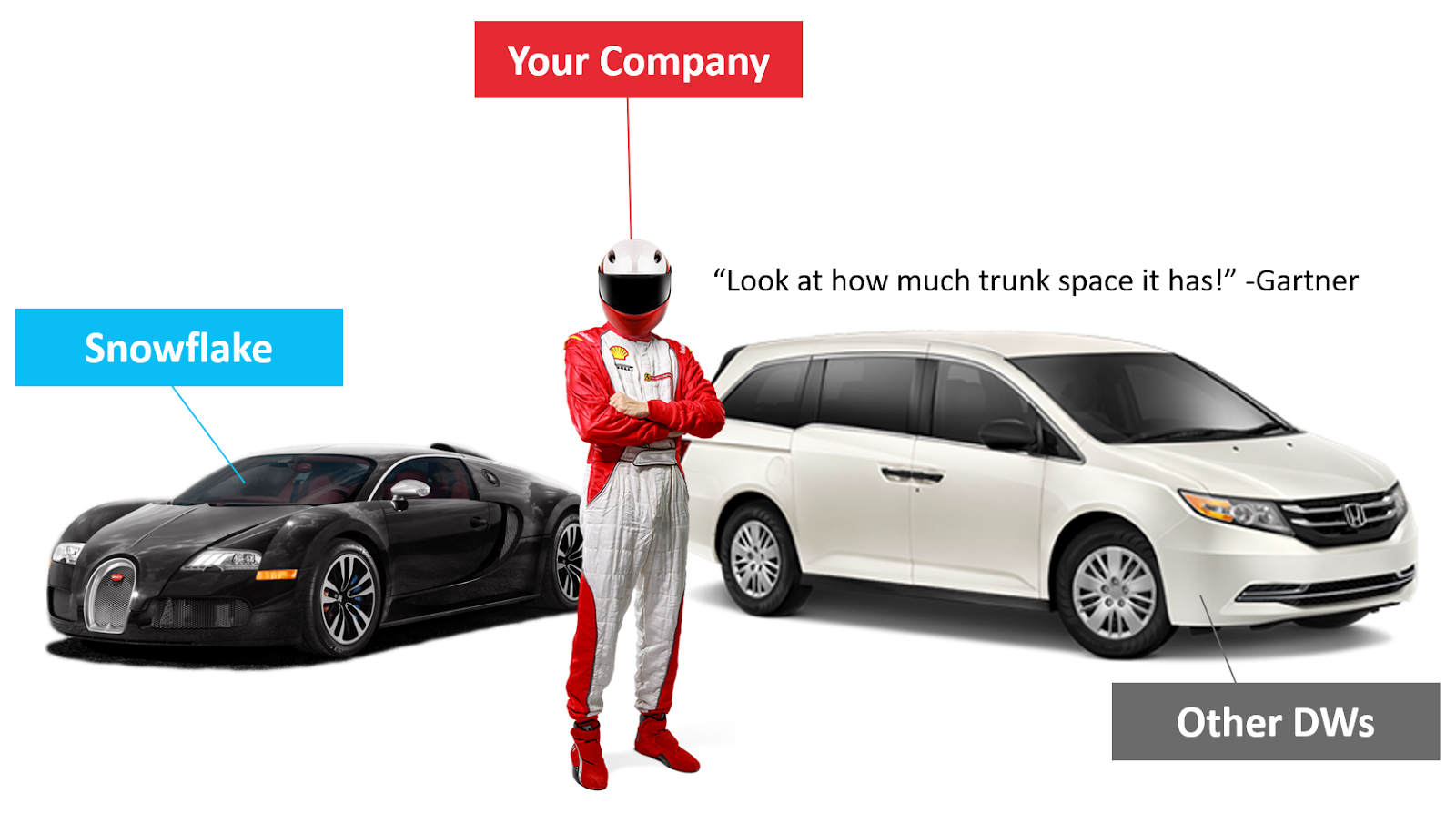

The differences were so stark that in 2019 when Gartner came out with their evaluation of Snowflake and compared to all the other data warehousing databases, there was a bit of a collective eye roll for those that were in the trenches. This may have prompted some interesting social posts from your humble white paper author...

Gartner was missing the reason why companies invest in Data Warehouses to begin with. However, it was enough to get larger companies to consider Snowflake in competitive bids, even if it was a dark horse. But all Snowflake needed was for prospects to get a peek and the deal was in the bag.

Their progress and the draw of large crowds to their regular events didn’t go unnoticed by some of the larger players. There were constant rumors swirling around about a [insert vendor name] Skunkworks project to release a “Snowflake killer” right around the corner. This usually became the most common objection to clients moving forward with a project. “Have you heard of [insert vendor name]’s new solution they’re building? I’ve heard that they will bury Snowflake with it.”

As stated in the beginning, the job of a good technology consulting organization is to make sure that the customer is on good footing for the long run. So these Skunkworks projects were very important to stay on top of. However, as the solution previews would release there seemed to always be a Price is Right loser horn playing in the background. The marketing was making these projects seem so exciting, but the new lipstick on the old pig didn’t fool customers. That’s not to say there weren’t decent strides made, but they weren’t “Snowflake killers.” Before you deem this author a Snowflake fanboy, let’s just clarify that unseating Snowflake is doable, but let’s go over 3 things competitors should NOT do.

#1 Don’t Repackage Your Technical Debt

Product companies don’t talk about their existing code as “technical debt” but if they’re going to compete head-to-head with real innovations, they’ll need to start from a clean slate. They can’t lure themselves into the Innovator's Dilemma by weighing their modernization down with old code dusted off to do new tricks.

Why This is Hard

Building from scratch is expensive, time-consuming, and not necessarily guaranteed to prove successful. There are probably about 30 people in the world that could write a marketable database from scratch (one that could actually be sold). So it's VERY tempting to use code that you’ve already invested in. But therein lies the problem. Most old code was designed for a very different ecosystem of hardware and software than exists in the cloud today. Attempting to completely unwind that code is an effort unto itself, so developers will often socket the code or build surrounding scripts to “cloudify” it.

Why You Can’t Beat Snowflake Without It

In Data Warehousing the inefficiency of the database engine’s code is particularly susceptible to exposure. This is because the processing of the code happens in such large volumes. The strategy taken early in the engines development compounds downstream either for better or worse. The more sacrifices you make, the greater your performance loss will be. When Snowflake’s founders created their codebase, it started from a mindset that said,  “if I could build the ideal engine what would it look like?” Then they built the layers of code from 0 to what it is today.

“if I could build the ideal engine what would it look like?” Then they built the layers of code from 0 to what it is today.

Not everything in Snowflake was built with 100% foresight, of course. There were many unforeseen requirements, but their solution wasn’t to find some old code off the shelf in order to address the issues.

#2 Don’t Get Distracted by the Existing Architecture

#2 is particularly challenging for cloud vendors. They have all done an amazing job of building a foundation of compute and storage which services can be built on. Those foundations have enabled countless innovations and are really the bedrock that any new data platform should be built from. However, cloud vendors haven’t been sitting on their hands with these foundations. On top of them, the vendors have constructed ancillary services which they call their own. These are services like SQL databases, NoSQL databases, containerization solutions, orchestration tools, replication solutions, etc. These ultimately make up an architecture that has been sold to customers as the default ecosystem of services. Independently, these services solve very distinct problems and work fine for most generic customer use cases. But that doesn’t mean those solutions have what it takes to construct a future Snowflake killer.

Why This is Hard

Put yourself in the shoes of a cloud vendor. How do you justify not using existing services within your architecture despite the fact that it might not be ideal for performance at scale? Starting from scratch and building up from there is not easy when you have pressures to be part of an “ecosystem.” The cloud vendors need to be able to act as a “jack of all trades” with many interchangeable services that can be combined to create powerful solutions. The downside to that approach is that it leaves their macro solutions in a position of being “master of none.” More specifically, this exposes their customers to the responsibility of being the managers of all these services which they have to configure, tune, and maintain.

Why You Can’t Beat Snowflake Without It

Snowflake’s code is written to abundantly use what the cloud vendors do best: compute and storage. Cloud vendors have to be exceptional at those two functions because their entire backbone of services relies on it. But for Snowflake, this is largely where the reliance on the cloud vendor ends. The remaining services are all internal to Snowflake’s codebase, which is why they have been so successful at making the jump from AWS to Azure and then Google Cloud. The specialization of the internal functions makes it highly efficient, and their internal operation happens automatically without requiring the client to own the maintenance of its cogs. So things like indexing, partitioning, and vacuuming happen automatically. This doesn’t mean there is no data engineering on Snowflake, but it does mean they can get to the meat and potatoes of the end task without having to manage some 3rd under-the-hood service.

#3 Don’t Build for a Single Cloud

#3 is something that is far more doable for the dark horse competition than it is for the big cloud vendors and the reason is obvious. But of the vendors that were born in the cloud and don’t offer their service outside of that, Snowflake stands alone in its cross-cloud capabilities. Cloudera and DataBricks have the ability to work on various cloud ecosystems as an adaptation to their previous on-premise solutions built to service Hadoop. However, a vast majority of Snowflakes competition is going head-to-head with the native solutions provided by the big cloud vendors, so that’s where I’m going to focus.

Why This is Hard

The cloud vendors are literally at battle with each other. The “all-in” bids have full executive backing as we saw in the multi-billion dollar JEDI contract between Azure and AWS. Extending olive branches to create alliances doesn’t appear to be a priority at the moment.

Why You Can’t Beat Snowflake Without It

When you clear the fog, the big cloud vendors are offering two core points of value: compute and storage. Everything else is built from those two foundational capabilities. Over time, that compute and storage will be just like any other commodity.

Let’s run a thought experiment. Do you have a General Electric cell phone, No? Why not? It’s powered by a General Electric turbine many miles away… Wouldn’t you want to use an integrated phone with your electric system? For that matter, why doesn’t Siemens have their own cell phone line?

This kind of thinking seems silly now, but it wasn’t so silly in the 1890s when Westinghouse and GE were similarly at each other's throats building products that used their electricity. This is something Intricity wrote about in 2019 in a whitepaper titled “The Commoditization of Networks.”

The networks for compute and storage will succumb to the same forces over the long run and most services in the future will be just as agnostic as your power outlet.

Where Snowflake Loses

Snowflake isn’t untouchable… yet (more on that later).

“Going All In”

Seemingly the most common play that Snowflake loses to is the “we’re going all-in on [insert cloud vendor here]”. If customers are committed to the cloud vendor as “a package” then this is where we see Snowflake not being invited. The cloud vendors know this all too well, so the tendency to bundle or offer global consumption as “credits” is pretty common.

While Snowflake cuts a check every year to their cloud hosts, these hosts are often uneasy about Snowflake moving from the role of a Data Warehouse to the role of a Data Lake. They see this as encroaching on their blob storage architectures which is often the springboard for other downstream services. Ironically, undergirding Snowflake storage is the cloud vendor's blob. So the sales reps from these cloud vendors complain that Snowflake gets all the cake every time they’re introduced. Not to mention the fact that Snowflake offers clients an easy way of replicating data to their competitors’ platforms. So this results in an odd frenemies relationship that has them all grins together and grimaces in private. This is why the big cloud platform sales reps and their corporate marketing machines push hard for the “all-in” play.

On-Prem

If you’re not planning on putting your data into the cloud, then… it's a nonstarter. Those organizations do exist, but it’s not a model that Snowflake supports.

Machine Learning/AI

In 2018, Snowflake and DataBricks announced a partnership that looked like it would become something. However, the relationship stalled about as fast as it started. The decision on who would process the compute seemed to loom heavy on the proposed architecture plans. The reality was that in 80% of the data processing use cases (which mostly involve data manipulation), Snowflake didn’t really need DataBricks. The latter 20% of requirements which constituted the Data Science teams were really the target audience for the partnership. However, these lines of demarcation were rarely agreed on by both parties. There’s no shortage of Machine Learning/AI vendors besides DataBricks which are more than willing to fill that 20% need within the Snowflake community. However, in conversations that are strictly about ML, Snowflake is often never invited to compete.

Snowflake Could Become Untouchable

In 2016, Snowflake started pitching the idea of what it called at the time “Data Sharing.” The idea was that their customers could securely share data with 3rd parties. Initially the framework for this idea was fairly simplistic. Basically the Snowflake customer would designate a secured view of data that could be shared with a 3rd party. When the 3rd party accessed the secured view they would interact with it using standard SQL. This was vastly better than the old managed file transfers corporations were managing with partners. The idea had a following and Snowflake signed-on many companies interested in the trend. However, Data Sharing didn’t really do much in terms of managing the transaction of selling data. So if a customer wanted to go to market with their data using Data Sharing, they would have to manage their own billing process to upsell the Snowflake compute costs or choose to absorb it.

From its inception, Data Sharing was headed to a much grander vision… an “Apps Store” for Data. If there is one thing that could turn Snowflake into an untouchable company it’s this very feature and it’s well on its way. Since early 2019, Snowflake has been partnering with a variety of companies to participate in its Data Marketplace. Companies across a wide spectrum of data providers have jumped in.

Then COVID hit and demand for external data grew around epidemiology, testing, contact tracing, and outbreaks. Snowflake was at the right place and the right time to hit that trend.

If Snowflake can build a position of being the world's data broker… they will create a significant moat around their castle. Just think about it, what other platforms would you choose if the world's shareable data was natively accessible and ready for integration via SQL? Not to mention the fact that corporations can now have an avenue to easily monetize data that is sharable…

So while other platforms are busy getting their technology to compete with Snowflake’s capabilities, Snowflake is skating “to where the puck is going, not where it has been.” – Wayne Gretzky

Who is Intricity?

Intricity is a specialized selection of over 100 Data Management Professionals, with offices located across the USA and Headquarters in New York City. Our team of experts has implemented in a variety of Industries including, Healthcare, Insurance, Manufacturing, Financial Services, Media, Pharmaceutical, Retail, and others. Intricity is uniquely positioned as a partner to the business that deeply understands what makes the data tick. This joint knowledge and acumen has positioned Intricity to beat out its Big 4 competitors time and time again. Intricity’s area of expertise spans the entirety of the information lifecycle. This means when you’re problem involves data; Intricity will be a trusted partner. Intricity's services cover a broad range of data-to-information engineering needs:

What Makes Intricity Different?

While Intricity conducts highly intricate and complex data management projects, Intricity is first a foremost a Business User Centric consulting company. Our internal slogan is to Simplify Complexity. This means that we take complex data management challenges and not only make them understandable to the business but also make them easier to operate. Intricity does this through using tools and techniques that are familiar to business people but adapted for IT content.

Thought Leadership

Intricity authors a highly sought after Data Management Video Series targeted towards Business Stakeholders at https://www.intricity.com/videos. These videos are used in universities across the world. Here is a small set of universities leveraging Intricity’s videos as a teaching tool:

Talk With a Specialist

If you would like to talk with an Intricity Specialist about your particular scenario, don’t hesitate to reach out to us. You can write us an email: specialist@intricity.com

(C) 2023 by Intricity, LLC

This content is the sole property of Intricity LLC. No reproduction can be made without Intricity's explicit consent.

Intricity, LLC. 244 Fifth Avenue Suite 2026 New York, NY 10001

Phone: 212.461.1100 • Fax: 212.461.1110 • Website: www.intricity.com